The JEDEC Association has released preliminary specifications for the next generation of high-bandwidth memory, HBM4. Although HBM4 runs at a lower per-pin data transfer rate than its predecessor, HBM3E, it doubles the interface width to 2048 bits per stack and supports a wider range of layer densities and stack heights. That combination suits it to bandwidth- and capacity-hungry applications.
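Peak bandwidth per stack is interface width multiplied by per-pin transfer rate, which is why a wider bus can outrun a faster but narrower one. The minimal sketch below compares HBM4 at the committee's initial 6.4 GT/s with HBM3E's 1024-bit interface at an assumed 9.6 GT/s. That HBM3E figure is illustrative only, since shipping speed bins vary by vendor, and the helper name `peak_bandwidth_gbs` is hypothetical.

```python
def peak_bandwidth_gbs(bus_width_bits: int, rate_gts: float) -> float:
    """Peak bandwidth in GB/s (decimal): bits per transfer * GT/s / 8 bits per byte."""
    return bus_width_bits * rate_gts / 8

# HBM4: 2048-bit interface per stack at up to 6.4 GT/s (preliminary figure).
hbm4 = peak_bandwidth_gbs(2048, 6.4)
# HBM3E: 1024-bit interface; 9.6 GT/s is an assumed, illustrative speed bin.
hbm3e = peak_bandwidth_gbs(1024, 9.6)

print(f"HBM4 : {hbm4:.1f} GB/s per stack")   # 1638.4 GB/s
print(f"HBM3E: {hbm3e:.1f} GB/s per stack")  # 1228.8 GB/s
```

Even with a lower transfer rate, doubling the width gives HBM4 roughly a third more peak bandwidth per stack under these assumptions.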

A New Era of Memory

The HBM4 standard introduces 24 Gb and 32 Gb DRAM layers in TSV stacks from 4-high to 16-high, giving memory makers a range of capacity points to match different applications.
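Per-stack capacity is layer density times stack height, divided by 8 to convert gigabits to gigabytes. The sketch below tabulates the combinations. It assumes stack heights in steps of four (4-, 8-, 12- and 16-high), since only the endpoints of the range are given here; `stack_capacity_gb` is a hypothetical helper.

```python
LAYER_DENSITIES_GBIT = (24, 32)  # DRAM layer densities named in the preliminary spec
STACK_HEIGHTS = (4, 8, 12, 16)   # assumption: heights in steps of four from 4-Hi to 16-Hi

def stack_capacity_gb(layer_gbit: int, height: int) -> int:
    """Capacity of one stack in gigabytes (8 gigabits per gigabyte)."""
    return layer_gbit * height // 8

for density in LAYER_DENSITIES_GBIT:
    row = ", ".join(f"{h}-Hi: {stack_capacity_gb(density, h)} GB" for h in STACK_HEIGHTS)
    print(f"{density} Gb layers -> {row}")
```

Under these assumptions, capacities run from 12 GB (4-Hi, 24 Gb layers) up to 64 GB (16-Hi, 32 Gb layers) per stack.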

The committee has initially set the maximum data transfer rate at 6.4 GT/s, with discussions ongoing about reaching higher rates. A 16-Hi stack built from 32 Gb layers will provide 64 GB of capacity, so a processor with four such stacks can support 256 GB of memory with a peak bandwidth of about 6.55 TB/s across its combined 8,192-bit interface.
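These system-level figures follow from simple arithmetic. The sketch below works them through for a hypothetical processor with four 16-Hi stacks of 32 Gb layers running at 6.4 GT/s, reporting bandwidth in decimal terabytes per second.

```python
STACKS = 4             # memory stacks attached to the processor
LAYERS_PER_STACK = 16  # 16-Hi stack
LAYER_GBIT = 32        # 32 Gb DRAM layers
BITS_PER_STACK = 2048  # HBM4 interface width per stack
RATE_GTS = 6.4         # initial maximum transfer rate, GT/s

capacity_per_stack_gb = LAYERS_PER_STACK * LAYER_GBIT // 8  # 512 Gb -> 64 GB
total_capacity_gb = STACKS * capacity_per_stack_gb          # 256 GB
total_bus_bits = STACKS * BITS_PER_STACK                    # 8,192 bits
peak_tbs = total_bus_bits * RATE_GTS / 8 / 1000             # GB/s -> TB/s (decimal)

print(f"{capacity_per_stack_gb} GB per stack, {total_capacity_gb} GB total")
print(f"{total_bus_bits}-bit bus, {peak_tbs:.2f} TB/s peak")  # 8192-bit bus, 6.55 TB/s peak
```

The exact result is 6,553.6 GB/s, which rounds to 6.55 TB/s.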

Compatibility and Integration

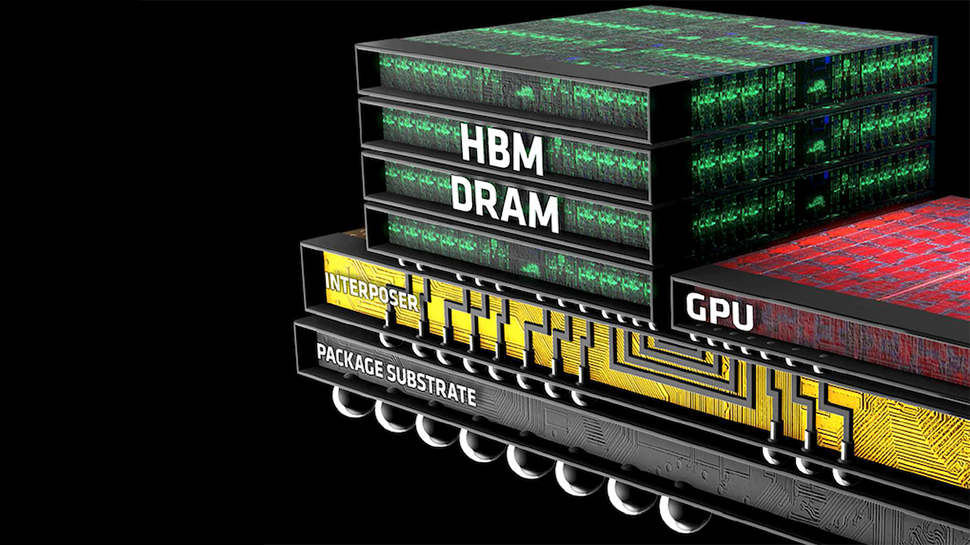

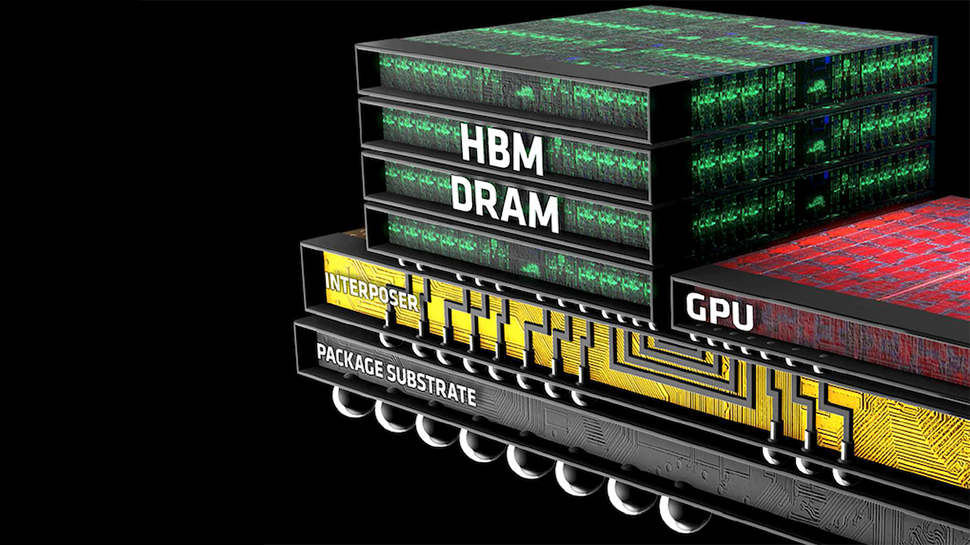

HBM4 doubles the channel count per stack compared to HBM3, which results in a larger physical footprint. To preserve compatibility, the standard allows a single controller to work with both HBM3 and HBM4, although different interposers will be needed to accommodate the differing footprints. Notably, JEDEC has not said anything about integrating HBM4 memory directly on top of processors, which is arguably the most intriguing prospect for the new memory type.
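The larger footprint follows from the channel organization: each HBM3 or HBM4 channel is 64 bits wide, so HBM3's 16 channels form a 1024-bit interface and HBM4's 32 channels a 2048-bit one. The sketch below models this with a hypothetical `StackProfile` type and `select_profile` helper (not any real controller API) to illustrate how one controller design might be parameterized per generation.

```python
from dataclasses import dataclass

CHANNEL_WIDTH_BITS = 64  # data width of one HBM3/HBM4 channel

@dataclass(frozen=True)
class StackProfile:
    """Hypothetical per-generation parameters a dual-mode controller might select."""
    name: str
    channels: int

    @property
    def interface_bits(self) -> int:
        # Total interface width is channel count times channel width.
        return self.channels * CHANNEL_WIDTH_BITS

HBM3 = StackProfile("HBM3", channels=16)
HBM4 = StackProfile("HBM4", channels=32)

def select_profile(generation: str) -> StackProfile:
    """Look up the channel layout for the attached stack (hypothetical helper)."""
    profiles = {p.name: p for p in (HBM3, HBM4)}
    return profiles[generation]

for gen in ("HBM3", "HBM4"):
    p = select_profile(gen)
    print(f"{p.name}: {p.channels} channels x {CHANNEL_WIDTH_BITS} bits = {p.interface_bits}-bit interface")
```

The same logic serves both generations; only the channel count changes. The physical differences are absorbed by the interposer rather than by the controller.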

A Collaborative Effort

Earlier this year, SK hynix and TSMC agreed to co-develop HBM4 base dies, and TSMC has confirmed it will use its 12FFC+ (12nm-class) and N5 (5nm-class) process technologies to manufacture them. N5 allows more logic to be integrated into the base die and supports finer interconnection pitches, both of which matter for integrating memory directly on logic. The 12FFC+ process, derived from TSMC's 16nm FinFET technology, will enable cost-effective base dies that connect memory to host processors through silicon interposers.

Targeting High-Performance Computing

HBM4 is primarily designed to meet the growing demands of generative AI and high-performance computing, workloads that must move vast datasets and perform complex computations efficiently. Given that focus, HBM4 is unlikely to be used in client applications such as graphics cards.

The release of the preliminary HBM4 specifications is a significant step for memory technology. With its wider interface, higher capacities, and potential for tighter integration with logic, HBM4 is set to reshape how data is stored and moved, particularly in the fast-growing fields of AI and high-performance computing.

HBM4: The Next Generation of High-Bandwidth Memory