When Copilot Chat Exposed Confidential Emails

What happened

Microsoft recently reported and addressed an incident involving Copilot Chat — its AI assistant integrated with Microsoft 365 — in which content from private emails surfaced in responses produced by the tool. The company says the bug has been fixed and that the exposure did not grant any user new permissions beyond what they already had in their tenant.

For IT leaders and developers, the episode is a reminder: connecting powerful AI assistants to enterprise data increases productivity, but also expands the blast radius of bugs and misconfiguration.

Quick background: Copilot in the enterprise

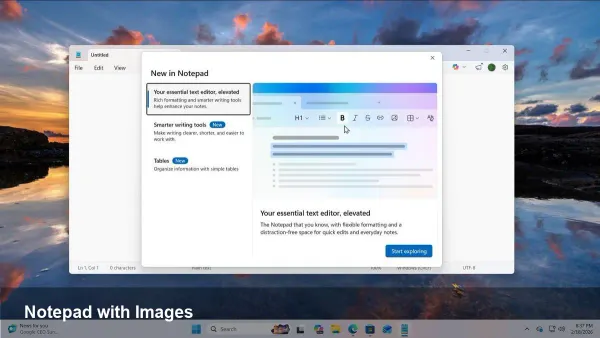

Copilot Chat is an experience layered on top of Microsoft 365 apps that lets users ask natural-language questions and receive synthesized answers drawn from documents, email, calendars and other organizational data. Enterprises adopted Copilot to speed tasks like summarizing threads, drafting messages, and extracting action items from shared content.

Architecturally, these assistants typically pull pieces of content into an index or vector store and then perform retrieval-augmented generation (RAG) to construct replies. That model is convenient and powerful — and makes strict data scoping and isolation critical.
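To make the scoping point concrete, here is a minimal sketch of a retrieval step that filters by tenant and per-document permissions before any ranking takes place. It is not how Copilot is implemented; `Chunk`, `score`, `retrieve`, and the sample `INDEX` are hypothetical names invented for illustration, and the word-overlap score stands in for a real vector similarity search.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    tenant_id: str
    allowed_users: frozenset  # users permitted to read the source document
    text: str


def score(query: str, text: str) -> int:
    """Toy relevance score: how many query words appear in the text."""
    words = set(text.lower().split())
    return sum(1 for w in query.lower().split() if w in words)


def retrieve(index, query, tenant_id, user_id, k=3):
    """Return up to k relevant chunks that the caller is allowed to read.

    Scoping happens before ranking, so an out-of-scope chunk can never
    reach the prompt, however relevant it looks.
    """
    in_scope = [
        c for c in index
        if c.tenant_id == tenant_id and user_id in c.allowed_users
    ]
    ranked = sorted(in_scope, key=lambda c: score(query, c.text), reverse=True)
    return [c for c in ranked[:k] if score(query, c.text) > 0]


# Hypothetical sample data: three similar emails with different owners.
INDEX = [
    Chunk("mail-1", "contoso", frozenset({"alice"}), "Renewal pricing for the Fabrikam account"),
    Chunk("mail-2", "contoso", frozenset({"bob"}), "Confidential renewal pricing draft"),
    Chunk("mail-3", "northwind", frozenset({"alice"}), "Renewal pricing for another tenant"),
]

hits = retrieve(INDEX, "renewal pricing", tenant_id="contoso", user_id="alice")
print([c.doc_id for c in hits])  # ['mail-1']
```

All three emails match the query equally well, so relevance alone cannot tell them apart. Only the explicit tenant and permission filter keeps Bob's draft and the other tenant's message out of Alice's answer.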

How confidential content can surface (likely vectors)

Microsoft has patched the specific bug, but it’s useful to understand the technical fault lines that make this type of exposure possible:

- Routing and isolation errors: AI services must respect tenant boundaries. A routing bug can cause a query to retrieve documents associated with the wrong user or tenant.

- Retrieval oversharing: Systems that summarize or cite material may include verbatim excerpts unless they apply filters or redactions.

- Connector and permission misconfiguration: If an external connector (e.g., email or file storage) is misconfigured, Copilot might index more data than administrators expect.

- Caching and stale indexes: Cached retrieval results, an index that still reflects permissions that have since been revoked, or a shared vector store without per-tenant encryption can leak content across queries.

These are general failure modes; the presence of any one increases risk when AI assistants are given access to sensitive sources like mailboxes.
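The caching failure mode in particular is easy to illustrate. The sketch below assumes a simple in-memory cache of retrieval results; `cache_key` and `RetrievalCache` are hypothetical names, not part of any Microsoft API. The idea is that the cache key includes the caller's tenant and user, so a hit can only be served back to the identity that produced it.

```python
import hashlib


def cache_key(tenant_id: str, user_id: str, query: str) -> str:
    """Build a cache key that includes the caller's identity.

    Keying on the query text alone would let one user's cached results
    be served to anyone who asks the same question.
    """
    normalized = " ".join(query.lower().split())
    # Length-prefix each field so ("ab", "c") and ("a", "bc") cannot collide.
    material = "".join(f"{len(p)}:{p}" for p in (tenant_id, user_id, normalized))
    return hashlib.sha256(material.encode("utf-8")).hexdigest()


class RetrievalCache:
    """Minimal in-memory cache of retrieved document IDs (illustrative only)."""

    def __init__(self):
        self._store = {}

    def get(self, tenant_id, user_id, query):
        return self._store.get(cache_key(tenant_id, user_id, query))

    def put(self, tenant_id, user_id, query, doc_ids):
        self._store[cache_key(tenant_id, user_id, query)] = list(doc_ids)


cache = RetrievalCache()
cache.put("contoso", "alice", "Q3 forecast", ["mail-1"])
print(cache.get("contoso", "alice", "q3 forecast"))  # ['mail-1']
print(cache.get("contoso", "bob", "Q3 forecast"))    # None
```

This sketch covers only cross-user and cross-tenant reuse. A production cache would also need expiry or invalidation when permissions change, which is not shown here.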

Real-world example (scenario)

Imagine a customer-support agent asks Copilot Chat to summarize recent correspondence with a high-value client. Instead of returning only the agent’s thread, a buggy retrieval step pulls in messages from other accounts or projects. The assistant then composes a reply that incorporates private excerpts or attachment contents from those messages, exposing confidential information to an agent who was never authorized to see it.

This type of scenario can trigger compliance alarms in regulated industries, create embarrassment, or expose trade secrets.

Immediate actions for IT and security teams

If you run Copilot Chat in your organization, take these practical steps now:

- Confirm the vendor status: Read the vendor bulletin, confirm that the fix applies to your tenant, and follow any patches or guidance it specifies.

- Review and tighten connectors: Audit which mailboxes, SharePoint sites, and external storage services are connected to AI features. Remove connectors that aren’t necessary.

- Scope data access: Use tenant-level controls and conditional access policies to restrict who can use Copilot and from where.

- Enable logging and auditing: Ensure detailed logs are retained and integrated with your SIEM. Monitor queries, source documents, and retrieval events.

- Run targeted data loss prevention (DLP): Apply DLP rules for sensitive content classes (PII, financials, health information) to block or redact material before it is indexed or returned.

- Communicate to users: Brief staff about expected behavior, when to stop using the tool for sensitive workflows, and how to report suspected exposures.

These steps reduce immediate risk while you confirm the vendor's fix and await any further clarification.
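As a rough illustration of the DLP step, the sketch below redacts a few sensitive patterns from text before it would be indexed or returned. It is a toy, not a substitute for a real DLP product: the `PATTERNS` table and `redact` function are hypothetical, the regular expressions are deliberately simple, and the `ACCT-` account-number format is an invented example.

```python
import re

# Illustrative patterns only; real DLP classifiers are far more thorough.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "ACCOUNT": re.compile(r"\bACCT-\d{8}\b"),  # hypothetical account format
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def redact(text: str):
    """Replace each match with a labeled placeholder.

    Returns the redacted text and a count of findings per label, so the
    counts can be logged without logging the sensitive values themselves.
    """
    findings = {}
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label} REDACTED]", text)
        if count:
            findings[label] = count
    return text, findings


clean, findings = redact("SSN 123-45-6789, account ACCT-00123456")
print(clean)     # SSN [SSN REDACTED], account [ACCOUNT REDACTED]
print(findings)  # {'SSN': 1, 'ACCOUNT': 1}
```

Returning counts rather than the matched values keeps the audit trail useful for a SIEM without turning the log itself into a second copy of the sensitive data.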

Engineering best practices for AI assistants

Teams building or integrating LLM-based tools should bake in protections from the start:

- Per-tenant vector stores and encryption: Keep retrieval stores logically and cryptographically isolated by tenant and application.

- Narrowly scoped access tokens and short-lived credentials: Avoid long-lived or broadly scoped credentials, which expand both the window and the reach of misuse.

- Context and prompt redaction: Strip or obfuscate sensitive fields (SSNs, account numbers) before indexing or sending to upstream models.

- Retrieval provenance: Attach metadata to each retrieved document so any generated response can be traced back to source documents and permissions.

- Automated privacy testing: Include red-team scenarios and fuzzing that attempt cross-tenant retrievals, data exfiltration, and reassembly of PII.

These are practical engineering controls that reduce the chance a bug will become a breach.
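Retrieval provenance and automated privacy testing fit together naturally, as the following minimal sketch suggests. It assumes every generated answer carries metadata about the documents that fed it; `Source`, `Answer`, and `provenance_violations` are hypothetical names. A privacy test can then check that metadata against the requesting user's identity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    doc_id: str
    tenant_id: str
    allowed_users: frozenset  # users permitted to read this document


@dataclass(frozen=True)
class Answer:
    text: str
    sources: tuple  # every Source whose content fed the answer


def provenance_violations(answer, tenant_id, user_id):
    """Return the doc_ids the requesting user should not have seen."""
    return [
        s.doc_id for s in answer.sources
        if s.tenant_id != tenant_id or user_id not in s.allowed_users
    ]


# Simulated leaky answer: two of the three sources are out of scope for alice.
own = Source("mail-1", "contoso", frozenset({"alice"}))
other_user = Source("mail-2", "contoso", frozenset({"bob"}))
other_tenant = Source("mail-3", "northwind", frozenset({"alice"}))
leaky = Answer("summary text", (own, other_user, other_tenant))

print(provenance_violations(leaky, "contoso", "alice"))  # ['mail-2', 'mail-3']
```

In a test suite, the same check could run against answers generated for seeded canary documents, failing the build whenever the list of violations is not empty.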

Business and compliance implications

Even if a vendor confirms that no unauthorized new access occurred, organizations must treat incidents like this seriously:

- Regulatory exposure: Depending on industry and geography, any incident where confidential data is mishandled can trigger notification requirements (GDPR, HIPAA, state breach laws).

- Contract and customer trust: Customers will expect transparency about what data sources are used and how their information is protected.

- Insurance and liability: Review cyber insurance policies and customer contracts, whose coverage and notification terms may be affected by incidents involving third-party AI services.

Document your risk assessment and keep stakeholders informed while you remediate.

What this means going forward

- Greater demand for granular controls: Enterprises will press vendors for per-user, per-connector controls and richer auditing for AI features.

- More vendor transparency: Customers will expect clearer explanations of how content is indexed, cached, and isolated — not just assurances that a bug is fixed.

- Regulation will follow capability: As AI assistants become ubiquitous in workplaces, regulators are likely to define obligations around data access, explainability and automated decision-making.

These shifts will change procurement and operational practices for IT teams.

Practical recommendation

Treat Copilot Chat and similar tools as high-value productivity features that also need traditional security hygiene. Start by auditing connections, enabling DLP and tenant controls, and insisting on vendor transparency. For engineering teams, prioritize retrieval provenance, per-tenant isolation and automated privacy testing before wide rollout.

If you haven't already, schedule a brief tabletop exercise: simulate an exposure, validate your logging, and test your user communication template. That small investment will pay off if a real incident occurs.

Whether you view this incident as a software bug or a warning shot, the lesson is the same: AI in the enterprise multiplies both capability and responsibility.